Databricks Lakehouse: Next Level of Data Brilliance

Databricks Lakehouse is a data management architecture that merges the best parts of a data warehouse with the best parts of a data lake. It pairs the ACID transactions and data governance of a data warehouse with the flexibility and cost efficiency of a data lake, enabling BI and ML on all data. Data is kept in massively scalable cloud object storage in open-source data formats. The lakehouse radically simplifies enterprise data infrastructure and accelerates innovation at a time when ML and AI are disrupting every industry. The data lakehouse replaces the current dependency on separate data lakes and data warehouses for modern data companies that require:

- Open, direct access to data stored in standard data formats.

- Low query latency and high reliability for BI and analytics.

- Indexing protocols optimized for ML and data science.

- A single source of truth that eliminates redundant costs and ensures data freshness.

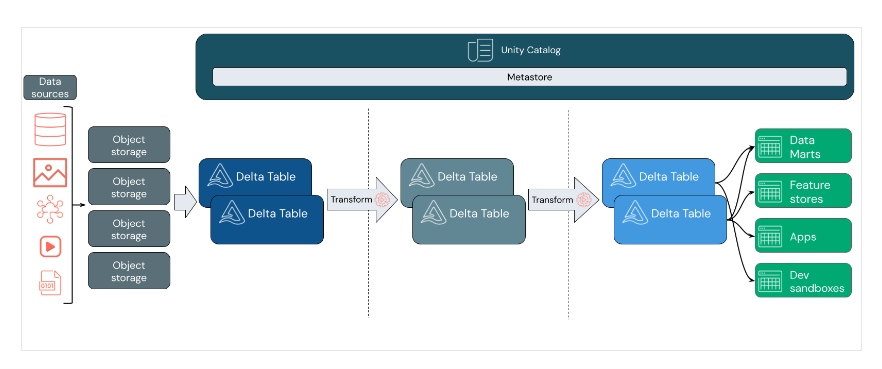

Fig. A simple flow of data through Lakehouse

Components of Lakehouse

1. Delta Table

Delta tables let downstream data scientists, analysts, and ML engineers work with the same production data used in current ETL workloads as soon as it is processed. A Delta table supports ACID transactions, data versioning, and ETL. The metadata used to reference the table is added to the metastore under the declared schema.
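To make data versioning more concrete, here is a toy, in-memory sketch of the idea: every commit is recorded as a list of "add"/"remove" file actions, and the table as of any version is rebuilt by replaying the log up to that version. `ToyVersionedTable` and the file names are hypothetical and this is not the Delta Lake API; in Delta Lake itself, older versions are read through reader options such as `versionAsOf`.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# ("add" | "remove", data file name); a simplified stand-in for real log actions.
Action = Tuple[str, str]


@dataclass
class ToyVersionedTable:
    """Hypothetical in-memory model of a versioned table (NOT the Delta Lake API)."""

    log: List[List[Action]] = field(default_factory=list)

    def commit(self, actions: List[Action]) -> int:
        """Record all actions as a single commit and return its version number."""
        for kind, _ in actions:
            if kind not in ("add", "remove"):
                raise ValueError(f"unknown action {kind!r}")
        # Validate first, then append the whole commit in one step, so a
        # commit is either fully recorded or not recorded at all.
        self.log.append(list(actions))
        return len(self.log) - 1

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Return the live data files as of `version` (default: latest)."""
        if version is None:
            version = len(self.log) - 1
        if not 0 <= version < len(self.log):
            raise ValueError(f"version {version} does not exist")
        files: Set[str] = set()
        # Replay commits 0..version in order ("time travel").
        for actions in self.log[: version + 1]:
            for kind, name in actions:
                if kind == "add":
                    files.add(name)
                else:
                    files.discard(name)
        return files


table = ToyVersionedTable()
table.commit([("add", "part-0.parquet"), ("add", "part-1.parquet")])   # version 0
table.commit([("remove", "part-0.parquet"), ("add", "part-2.parquet")])  # version 1
print(sorted(table.snapshot(0)))  # ['part-0.parquet', 'part-1.parquet']
print(sorted(table.snapshot()))   # ['part-1.parquet', 'part-2.parquet']
```

Because old commits are never rewritten, any earlier version stays reconstructable, which is what makes versioning and auditing cheap in this model.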

2. Unity Catalog

Unity Catalog gives complete control over who can access which data and provides a centralized mechanism for managing access control without replicating data. It gives administrators a single place to assign permissions on catalogs, databases, tables, and views to groups of users.
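The following is a simplified sketch of how hierarchical, group-based permissions can be evaluated. The privilege names (`USE CATALOG`, `USE SCHEMA`, `SELECT`) mirror Unity Catalog's, and Unity Catalog calls databases "schemas", but the checking logic is our own simplification: it ignores ownership, admin roles, and every other privilege. The class `ToyGrants` and the names `main`, `sales`, `analysts`, `alice`, and `bob` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class ToyGrants:
    """Hypothetical hierarchical access control, loosely modeled on Unity Catalog.

    Securables are dotted names such as "main", "main.sales", "main.sales.orders".
    A privilege granted on a parent object is inherited by all of its children.
    """

    grants: Set[Tuple[str, str, str]] = field(default_factory=set)  # (group, securable, privilege)
    members: Dict[str, Set[str]] = field(default_factory=dict)      # user -> groups

    def grant(self, group: str, securable: str, privilege: str) -> None:
        self.grants.add((group, securable, privilege))

    def has(self, user: str, securable: str, privilege: str) -> bool:
        """True if any of the user's groups holds `privilege` on the object or an ancestor."""
        groups = self.members.get(user, set())
        parts = securable.split(".")
        for depth in range(1, len(parts) + 1):
            prefix = ".".join(parts[:depth])  # "main", then "main.sales", ...
            if any((group, prefix, privilege) in self.grants for group in groups):
                return True
        return False

    def can_select(self, user: str, table: str) -> bool:
        """Reading a table needs USE CATALOG, USE SCHEMA, and SELECT."""
        catalog, schema, _ = table.split(".")
        return (
            self.has(user, catalog, "USE CATALOG")
            and self.has(user, f"{catalog}.{schema}", "USE SCHEMA")
            and self.has(user, table, "SELECT")
        )


acl = ToyGrants(members={"alice": {"analysts"}, "bob": {"engineers"}})
acl.grant("analysts", "main", "USE CATALOG")
acl.grant("analysts", "main.sales", "USE SCHEMA")
acl.grant("analysts", "main.sales.orders", "SELECT")
print(acl.can_select("alice", "main.sales.orders"))     # True
print(acl.can_select("alice", "main.sales.customers"))  # False: no SELECT on this table
print(acl.can_select("bob", "main.sales.orders"))       # False: bob is not an analyst
```

Permissions are attached to groups rather than individual users, and the data itself is never copied per audience, which is the point the paragraph above makes about centralized control.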

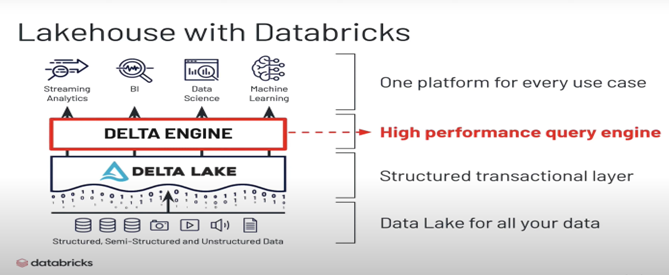

Delta Lake

Delta lake is a file based, open-source storage format that provides ACID transactions and scalable metadata handling, unifies streaming and batch data processing. It runs on top of existing data lakes. Delta lake integrates with all major analytics tools.

Fig. Lakehouse with Databricks
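One way a file-based format can offer ACID commits on plain storage is optimistic concurrency over an ordered log of commit files. The sketch below assumes a local file system that supports hard links and uses `os.link` as an atomic "put-if-absent": each commit is a JSON-lines file named by its zero-padded version, similar in spirit to Delta Lake's `_delta_log` directory. The helper names (`try_commit`, `commit`, `latest_version`, `log_file`) are hypothetical. Real Delta Lake also checks for logical conflicts before retrying and, on some object stores, needs extra coordination for mutual exclusion; this toy simply retries.

```python
import json
import os
import tempfile
import uuid
from pathlib import Path
from typing import Dict, List


def log_file(log_dir: Path, version: int) -> Path:
    # Commit files are named by zero-padded version, e.g. 00000000000000000000.json.
    return log_dir / f"{version:020d}.json"


def latest_version(log_dir: Path) -> int:
    """Highest committed version, or -1 if nothing has been committed yet."""
    if not log_dir.exists():
        return -1
    return max((int(p.stem) for p in log_dir.glob("*.json")), default=-1)


def try_commit(log_dir: Path, version: int, actions: List[Dict]) -> bool:
    """Publish commit `version` only if no other writer has claimed it."""
    log_dir.mkdir(parents=True, exist_ok=True)
    # Write the full commit to a private temp file first ...
    tmp = log_dir / f".{version:020d}.{uuid.uuid4().hex}.tmp"
    tmp.write_text("".join(json.dumps(a) + "\n" for a in actions), encoding="utf-8")
    try:
        # ... then hard-link it into place. os.link fails if the target exists,
        # so the commit appears whole or not at all (atomic put-if-absent).
        os.link(tmp, log_file(log_dir, version))
        return True
    except FileExistsError:
        return False  # another writer committed this version first
    finally:
        tmp.unlink()


def commit(log_dir: Path, actions: List[Dict], max_attempts: int = 5) -> int:
    """Optimistic concurrency: take the next version, retry if we lose the race."""
    for _ in range(max_attempts):
        version = latest_version(log_dir) + 1
        if try_commit(log_dir, version, actions):
            return version
    raise RuntimeError("gave up after repeated commit conflicts")


with tempfile.TemporaryDirectory() as root:
    log_dir = Path(root) / "_delta_log"  # hypothetical table location
    print(commit(log_dir, [{"add": {"path": "part-0.parquet"}}]))  # 0
    print(commit(log_dir, [{"add": {"path": "part-1.parquet"}}]))  # 1
    # A writer that still believes version 1 is free loses the race:
    print(try_commit(log_dir, 1, [{"remove": {"path": "part-0.parquet"}}]))  # False
```

Readers only ever see complete commit files, so a query either includes a commit entirely or not at all.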

Medallion Lakehouse Architecture – Delta Design pattern

The Medallion architecture describes a series of data layers that denote the quality of data stored in the lakehouse. The terms Bronze, Silver, and Gold describe the quality of data in each layer. As data moves through the layers, we can apply multiple transformations and business rules. This multilayered approach helps build a single source of truth for enterprise data products.

- Bronze – Raw data ingestion.

- Silver – Validated, filtered data.

- Gold – Enriched data and business-level aggregates.
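The three layers can be illustrated with a small plain-Python pipeline. This is a sketch under simplifying assumptions: records are comma-separated order lines, the feed name `orders_feed`, the functions `to_bronze`/`to_silver`/`to_gold`, and the business rules (drop malformed rows, non-numeric or negative amounts, and duplicate order IDs) are all hypothetical. On Databricks each layer would typically be a Delta table rather than a Python list.

```python
from collections import defaultdict
from typing import Dict, List


def to_bronze(raw_lines: List[str]) -> List[Dict]:
    """Bronze: land raw records as-is, tagged with (hypothetical) source metadata."""
    return [{"raw": line, "source": "orders_feed"} for line in raw_lines]


def to_silver(bronze: List[Dict]) -> List[Dict]:
    """Silver: parse, validate, and filter out bad or duplicate rows."""
    seen = set()
    silver = []
    for rec in bronze:
        parts = [p.strip() for p in rec["raw"].split(",")]
        if len(parts) != 3:
            continue  # malformed row
        order_id, region, amount = parts
        try:
            value = float(amount)
        except ValueError:
            continue  # non-numeric amount
        if value < 0 or order_id in seen:
            continue  # business rules: no negative amounts, no duplicate orders
        seen.add(order_id)
        silver.append({"order_id": order_id, "region": region, "amount": value})
    return silver


def to_gold(silver: List[Dict]) -> Dict[str, float]:
    """Gold: business-level aggregate (total sales per region)."""
    totals: Dict[str, float] = defaultdict(float)
    for row in silver:
        totals[row["region"]] += row["amount"]
    return dict(totals)


raw = ["1,EU,10.0", "2,US,5.5", "2,US,5.5", "3,EU,oops", "bad line", "4,EU,-3", "5,EU,2.5"]
print(to_gold(to_silver(to_bronze(raw))))  # {'EU': 12.5, 'US': 5.5}
```

Keeping Bronze untouched means Silver and Gold can always be rebuilt from the raw data if a business rule changes.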

Data Objects in Databricks Lakehouse

The Databricks Lakehouse organizes data stored with Delta Lake in cloud object storage into familiar relational objects such as databases, tables, and views. There are five primary objects in the Databricks Lakehouse:

- Catalog

- Database

- Table

- View

- Function
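Tables, views, and functions sit inside a database, and databases sit inside a catalog, so an object can be addressed with up to three dot-separated parts (catalog.database.object). The sketch below shows, under simplifying assumptions, how a shorter name could be expanded using a session's current catalog and database. `ObjectName`, `resolve`, and the names `main`, `sales`, `finance`, and `dev` are hypothetical, and backtick quoting of names is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectName:
    """Fully qualified name of a table, view, or function (hypothetical helper)."""

    catalog: str
    database: str
    name: str

    def __str__(self) -> str:
        return f"{self.catalog}.{self.database}.{self.name}"


def resolve(identifier: str, current_catalog: str, current_database: str) -> ObjectName:
    """Expand a 1-, 2-, or 3-part name using the session's current catalog/database."""
    parts = identifier.split(".")
    if any(not p for p in parts):
        raise ValueError(f"empty name part in {identifier!r}")
    if len(parts) == 1:  # object -> current catalog and database
        return ObjectName(current_catalog, current_database, parts[0])
    if len(parts) == 2:  # database.object -> current catalog
        return ObjectName(current_catalog, parts[0], parts[1])
    if len(parts) == 3:  # catalog.database.object, already fully qualified
        return ObjectName(*parts)
    raise ValueError(f"too many name parts in {identifier!r}")


print(resolve("orders", "main", "sales"))            # main.sales.orders
print(resolve("finance.invoices", "main", "sales"))  # main.finance.invoices
print(resolve("dev.sales.orders", "main", "sales"))  # dev.sales.orders
```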

Lakehouse Platform Workloads

- Data Warehousing

- Data Engineering

- Data Streaming

- Data Science & Machine Learning

Pros

- Adds reliability, performance, governance, and quality to existing data lakes.

- ACID transactions

- Scalable metadata handling

- Unified data teams

- Reduced risk of vendor lock-in

- Storage decoupled from compute

- ML and analytics support

Cons

- Complex setup and maintenance – The platform can be complex to set up and maintain, requiring specialized skills and resources.

- Limited fit for simple use cases – Its advanced capabilities may not suit use cases that need only basic functionality.

The purpose of this post is to provide a broad overview of Databricks Lakehouse. Please get in touch with us if our content piques your interest.

Ready to get started?

From global engineering and IT departments to solo data analysts, DataTheta has solutions for every team.