
1. Introduction

Jaipur is growing rapidly as a technology and analytics hub, with more businesses using data to improve decisions and performance. Many startups, mid-sized companies and large enterprises in Jaipur depend on data analytics partners to understand their customers, track operations and plan for the future. Data analytics companies in Jaipur help organisations turn raw data into clear, meaningful insights through services such as business intelligence, data engineering and AI solutions. These services support better planning and decision making. The list below highlights the top 7 data analytics companies in Jaipur that are well known for their skills, reliability and successful delivery. A good analytics partner supports long-term growth, smarter strategies and measurable business outcomes.

2. What is AI and Data Analytics in Jaipur?

Data analytics is the process of collecting, preparing and analyzing business data to discover hidden trends, patterns and insights. It supports sound planning and decision making for business growth. It typically consists of data integration, predictive modeling, performance measurement and visual reporting, supported by a range of tools and technologies that serve the business objectives of various departments. Leading data analytics companies in Jaipur use a combination of these tools and technologies to help industries such as healthcare, energy, e-commerce and logistics, manufacturing, and banking and FinTech solve their business problems.

3. Top Data Analytics Companies in Jaipur

3.1) DataTheta

DataTheta is a decision sciences and analytics consulting firm headquartered in Texas, USA, with delivery centres in Jaipur. Enterprises choose DataTheta when they need reliable, governed and scalable analytics environments, not just dashboards. The firm is known for its strong focus on governance, compliance, security and scalability. Its team of senior data engineers and data scientists averages more than 10 years of experience, which supports high-quality delivery across complex analytics initiatives. The team has delivered 80+ data projects with a 98% client satisfaction rate. DataTheta combines industry expertise with flexible engagement models: fixed-time projects, managed services, and developers-on-demand (also known as fixed-time resources).

This agile approach helps businesses modernize their analytics capabilities efficiently while keeping control over cost and performance.

3.2) Navsoft

Navsoft is a global technology company with more than 25 years of experience helping businesses grow using simple, practical digital solutions. The company supports organizations with big data and analytics, ERP and CRM, enabling them to use data effectively and make better business decisions.

Trusted by Leading Brands Worldwide

• Worked with Fortune 500 companies

• Solutions actively used in 33+ countries

Making AI Simple and Useful for Businesses

GenAI Solutions

Custom large language models built for real business needs, used for customer support automation, content creation and similar tasks.

Conversational AI

Teams can ask questions in any language and source insights directly from business data, which helps them make faster and better decisions.

Predictive Analytics

Uses historical data to forecast trends, identifies risks and opportunities early, and supports better planning and decision making.

Data Engineering

Builds reliable, scalable data pipelines and ensures that analytics and AI systems run smoothly in real environments.

Computer Vision

Converts existing CCTV systems into smart monitoring tools that track safety and unusual activity.

AI Consulting

Practical AI strategies aligned with clear business goals.

Min project size: $10,000+

Hourly rate: $25 - $49 / hr

Employees: 250 - 999

Year founded: 1999

3.3) Edvantis

Edvantis is a global software engineering company with more than 400 skilled professionals working across the USA and Europe. It helps businesses build high-quality software products on time and at scale. It has a delivery and hiring hub in Jaipur that builds scalable, robust data analytics programs to improve operational measurement, risk assessment and reporting systems.

Tech Expertise:

Software Engineering Services:

Includes software architecture and software development, quality assurance and testing, business analysis and IT operations.

BI & Data Analytics:

Includes Data integration and data warehousing, data science and machine learning, predictive analysis and advanced data visualisation.

Artificial Intelligence (AI):

Includes AI assisted software development, Natural Language Processing, Voice and speech recognition.

Service Models:

- Dedicated teams, with a hiring time of more than 1 week

- Fixed-Price Project led by Agile, Scrum and PMI certified experts

- Digital Transformation & IT Consulting

Focus Industries:

- Hi-Tech

- HealthTech

- Real Estate

- Public Sector

- Supply Chain & Logistics

Why Edvantis?

- On time delivery with agile planning

- More than 72% senior and expert level engineers

- Strong security standards including DSS, GDPR, HIPAA etc.

- Flexible engagement with free consulting.

Min project size - $10,000+

Hourly rate - $25 - $49 / hr

Employees - 250 - 999

Year founded - 2005

3.4) Massive Insights

Massive Insights has been helping companies use data effectively since 2012. The company works with data-rich organisations to turn information into clear insights and business results. Massive Insights focuses mainly on speed, clarity and execution.

What Massive Insights does:

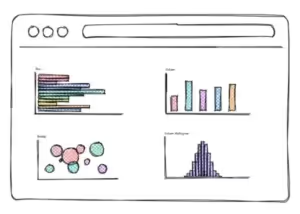

BI & Dashboards - Builds simple, clear dashboards that people actually use and that help teams act on the data.

Data Strategy - Creates practical data roadmaps and aligns data work with real business goals.

AI & ML Enablement - Starts with small, useful use cases, proves value quickly and scales over time.

Cloud Data Architecture - Designs modern, scalable data systems with a focus on speed, reliability and trust.

Platform Modernization & Adoption - Provides dedicated or part-time analysts and architects, and helps teams get more value from existing tools.

Why Massive Insights?

Proven Experience - More than 12 years of experience in analytics, with thousands of successful projects completed.

Business-First Approach - Focuses on solving real business problems, with every project tied to measurable outcomes.

Fast and Focused - Follows an MVP-driven approach.

Stakeholder-Ready Solutions - Supports internal teams across marketing, finance and operations, and delivers insights that are easy to understand and act on.

Min project size - $25,000+

Hourly rate - $25/hr

Employees - 10 - 49

Year founded - 2012

3.5) Scalo

Scalo is a Polish software development company that builds modern, future-ready digital solutions for businesses. It has more than 17 years of experience and more than 750 successful projects. Scalo is well known for delivering reliable, scalable technology that grows with changing business needs.

Scalo provides services such as:

- Custom software development that adapts as the business grows.

- Data & AI solutions that turn data into clear and relevant insights.

- IT staff augmentation to add skilled technology experts to the team.

Building Future-Ready Software

- Designs solutions that work today and scale for tomorrow.

- Adapts easily to new technologies and changing markets.

- Helps businesses stay competitive over the long term.

Areas of Expertise:-

Digital Innovation & AI - Provides smart automation solutions and data-driven prediction software.

Cloud & DevOps Excellence - Provides full-stack software development and cloud performance optimisation.

Data Engineering & Analytics - Helps process and use big data, and builds reliable pipelines.

Digital Experience Design - User focused design using a clean and intuitive interface.

Software Architecture & Integration - API development and system integration.

Partnerships & Certifications

- Expertise in Microsoft Azure and AWS cloud platforms.

- Partnerships with Ab Initio and Form.io for data governance.

- ISO 27001 certified for information security.

Min project size - $10,000+

Hourly rate - $50 - $99 / hr

Employees - 250 - 990

Year founded - 2007

3.6) Reenbit

Reenbit is an AI-driven software development and data engineering company based in Eastern Europe. It helps businesses build, modernize and scale digital products, from enterprise software to data platforms that support faster and smarter decisions.

Reenbit has a team of more than 100 engineers and more than 7 years of experience. It is a Microsoft partner and is ISO 27001:2022 certified for security. It works as a long-term technology partner.

What Reenbit does -

- Custom software development for enterprise applications.

- Data engineering and AI to improve data visibility and decision making.

- Cloud engineering on Microsoft Azure.

- Process automation to improve efficiency.

Industries Served -

- Retail and e-commerce

- Energy

- Healthcare

- Logistics

Reenbit has delivered more than 70 projects worldwide and helps companies improve operations, scalability and data clarity. Companies choose Reenbit for its strong engineering culture and business-first mindset. The focus is on real business outcomes, not just code.

Min project size - $25,000+

Hourly rate - $25 - $49 / hr

Employees - 50 - 249

Year founded - 2018

3.7) Data Understood

Data Understood is a data analytics consulting firm that helps organisations use their data in the right way to achieve real business goals.

What Data Understood does -

- Helps businesses find clear direction in large and complex data.

- Turns data into useful insights that support today’s needs and future growth.

- Keeps the organisation flexible and agile as data and technology evolve.

Why Data Strategy matters -

- Data is a valuable business asset when it is used correctly

- Many companies adopt AI and analytics too quickly.

- Without a clear strategy, this leads to confusion as well as wasted effort.

Data Understood builds clear data strategies aligned with business goals. It ensures that data is well structured, governed and reliable, and it helps create a data-driven culture across teams. Organisations choose Data Understood when they need smarter decision making, better efficiency and a stronger competitive advantage.

Min project size - $10,000+

Hourly rate - $100 - $149 / hr

Employees - 25 - 50

Year founded - 2018


4) Conclusion

The data analytics ecosystem in Jaipur is growing at a rapid pace, with more enterprises looking for structured analytics support, prediction systems and performance measurement tools. This article has listed reliable companies in Jaipur that provide data analytics services such as data engineering, predictive modeling, reporting structures and consulting. They have experience working with small and medium-sized businesses (SMBs) as well as large enterprises, solving business problems with an advanced technology stack. Compare these companies' capabilities, pricing structures and data engineering experience and expertise before selecting the best data analytics partner in Jaipur for your business.

1) Introduction

Ahmedabad has become an important centre for manufacturing and retail businesses. Companies are increasingly using data to improve efficiency, reduce costs and understand customers. Factories and supply chains generate large amounts of data, so businesses need data analytics partners who can turn this information into clear, practical insights. Managing this data without analytics often leads to delays, wastage and missed opportunities. Data analytics companies in Ahmedabad help manufacturing and retail organisations track production performance, forecast demand, manage inventory and improve customer experience. They convert raw numbers into simple reports, dashboards and forecasts that show what is working, what is not and what needs improvement. Their services include business intelligence dashboards, data engineering and predictive analytics designed for real operational needs. This list highlights the top data analytics companies in Ahmedabad that help manufacturing and retail businesses make faster, smarter and more reliable decisions.


2) What is Data Analytics?

Data analytics is the process of collecting, preparing and analyzing business data to discover hidden trends, patterns and insights. It supports sound planning and decision making for business growth. Data analytics in Ahmedabad typically consists of data integration, predictive modeling, performance measurement and visual reporting, supported by a range of tools and technologies that serve the business objectives of various departments. Leading data analytics companies in Ahmedabad use a combination of these tools and technologies to help industries such as healthcare, energy and e-commerce.

3) Top Data Analytics Companies In Ahmedabad

3.1) DataTheta

DataTheta is a data analytics consulting firm with delivery centres in Ahmedabad, India. Companies choose DataTheta when they need reliable, secure and scalable data environments rather than only basic dashboards. The team consists of experienced data engineers and data scientists with more than 10 years of experience. DataTheta ensures high-quality execution, especially for complex data and AI projects, with a strong focus on governance, compliance and security. It has delivered more than 80 projects and has a client satisfaction rate of 98%.

DataTheta offers flexible working models based on client needs:

- Fixed time projects

- Managed analytics services

- Developers on demand.

This flexible approach helps businesses modernize their analytics systems efficiently while keeping costs under control.

3.2) Spec India

SPEC India is a software development company established in 1987. Over the years the company has grown by adopting modern technologies and AI-driven development. It builds secure, scalable and practical digital solutions while ensuring data privacy and enterprise-level security. SPEC India has a skilled team of developers who focus on creating smart, easy-to-use and reliable applications. They understand the business needs first and then build solutions accordingly.

The company works with clients globally, including in the USA, the UK, Europe, Germany and Canada.

Its clients include -

- Fortune 100 and 500 companies

- Startups

- Small and medium enterprises.

SPEC India works across many industries such as -

- Healthcare

- Finance and insurance

- Retail

- eCommerce

- Sports

Many businesses choose SPEC India for its strong standing across the industry. The company reports more than 3,000 projects delivered, more than 40 companies served and a 200-plus client rate. It builds intelligent, AI-powered solutions while ensuring data security.

Min project size - $5,000+

Hourly rate - $25 - $49 / hr

Employees - 250 - 999

Year founded - 1987

3.3) Cygnet.One

Cygnet.One is a global digital transformation and engineering company that helps mid-sized and large enterprises use data, AI and cloud technologies to improve business performance. It helps businesses organise scattered data systems and build strong data foundations that support growth, governance and smarter decisions. It also helps organisations adopt artificial intelligence responsibly through AI consulting and implementation services.

These AI solutions help in -

- Improving business decisions

- Supporting generative AI use cases

- Enabling intelligent workflows

They also provide cloud engineering services such as -

- Cloud Migration

- Cloud optimisation

- Secure and scalable platform setup

Min project size - $10,000+

Hourly rate - $25 - $49 / hr

Employees - 1,000 - 9,999

Year founded - 2000

3.4) RadixWeb

RadixWeb is an Ahmedabad-based company that provides analytics and data solutions to organisations. It supports end-to-end analytics work, including data strategy, analytics dashboards and data engineering, and builds systems that turn data into clear insights and smarter decision-making tools. Businesses that look for reliable analytics and data-driven transformation support often choose RadixWeb. It helps them combine data from various sources, prepare it for reporting and build analytics dashboards.

Min project size - $25,000+

Hourly rate - $25 - $49 / hr

Employees - 250 - 999

Year founded - 2000

3.5) Analytics Liv Digital LLP

Analytics Liv Digital LLP is a Google-certified partner and Meta Business Partner that helps businesses grow using data-driven marketing strategies. It combines SEO, Google Analytics and CRO (conversion rate optimisation) to understand what is working, identify gaps and make practical changes that increase revenue.

They mainly focus on-

- Increasing conversions

- Reducing customer acquisition costs.

- Helping brands in making clearer decisions.

- Improving overall marketing performance.

They do this by using data to find where marketing efforts are leaking value, fixing issues through SEO, ads and UX improvements, and running focused experiments tied directly to business goals. Decisions are guided by real data instead of assumptions.

Min project size - $1,000+

Hourly rate - $50 - $99 / hr

Employees - 10 - 49

Year founded - 2021

3.6) Elegant MicroWeb

Elegant MicroWeb is a software products and services company focused on delivering real value through technology. It works with customers across the USA, Europe, Japan and India, supporting businesses of all sizes, and has years of experience delivering technology solutions worldwide.

The company aims to make technology simple to use and easy to deploy. It provides feature-rich solutions in a stable and reliable environment. Its services include application development and maintenance, mobile application development and IT consulting.

Industry and client experiences -

- Works with SMEs, software companies and web agencies

- Experience across multiple industries and business models

- Handles projects of all sizes and complexity

The company follows proven delivery processes. Its team is made up of skilled professionals who deliver on time with a strong focus on quality. Elegant MicroWeb helps businesses build and maintain reliable software solutions by making technology easy, effective and valuable.

Min project size - $5,000+

Hourly rate - $25 - $49 / hr

Employees - 50 - 249

Year founded - 2001

4) Conclusion

Ahmedabad is a strong hub for data analytics and BI services, with many firms that support enterprises in forecasting, customer insights and cloud data integration. Many companies in Ahmedabad offer similar analytics capabilities, but strong partners stand out on factors such as industry understanding, clear ownership of models and secure data handling. The firms listed above are trusted by sectors such as finance, retail and media because they deliver structured data pipelines and reliable BI reporting. Choosing the right data analytics partner is important: it leads to smoother planning and better adoption of analytics, and the right firm becomes a long-term partner that helps reduce uncertainty and improve decision making.

1. Introduction

Pune is one of the leading cities in India for IT services and manufacturing. It is home to many software companies, engineering firms and technology startups. As these businesses grow rapidly, they generate large amounts of data from operations, customers and digital platforms. However, access to data is not enough. Companies also need the right tools and expertise to understand and use that data effectively. This is where data analytics and business intelligence companies play an important role. These firms help businesses collect, organise and analyse data to create reports and dashboards. Analytics helps companies improve production efficiency, understand customer behavior and track project performance. Data analytics and BI companies in Pune help IT and manufacturing businesses make smarter decisions and improve performance.

2. Top 7 Data Analytics & Business Intelligence Companies in Pune for IT & Manufacturing Businesses

2.1) DataTheta

DataTheta is a data and analytics consulting company with an office in Pune. The company helps businesses make sense of data that is spread across many systems and tools. Many organisations struggle because their data is scattered, unorganised and hard to trust. DataTheta brings this data together into one clean, reliable system so that decision makers can clearly see what is happening in their business.

What DataTheta does -

- Creating cloud based data platforms that store the data in one place.

- Building automated pipelines that help in moving the data between systems without any manual effort.

- Designing data warehouses

- Developing BI dashboards that show performance clearly.

DataTheta serves businesses across industries such as -

- Pharma and Healthcare

- Retail

- Banking and manufacturing services

- SaaS and technology companies

Min project size - $5,000+

Hourly rate - < $25 / hr

Employees - 50 - 249

Year founded - 2017

2.2) Finarkein Analytics

Finarkein Analytics is a big data analytics platform in Pune built specifically for the BFSI (Banking, Financial Services and Insurance) industry. The company helps banks and financial institutions use data in a smarter and faster way to improve customer engagement and collections. Finarkein Analytics makes it easier for banks to understand customer data and make better financial decisions using technology.

What Finarkein does -

- Provides a ready analytics platform that banks can start using without building everything from scratch.

- Uses open data to understand customers better.

- Supports collections and recovery processes.

Key Features of Finarkein Analytics -

- Ready to use APIs for faster data integration

- Designed specifically for banking and financial institutions

- Helps in converting the raw data into meaningful insights

- Supports better decision making

Founded year - 2019

2.3) Talentica Software

Talentica Software is a Pune-based technology and product engineering company that works closely with startups, enterprises and technology-driven businesses. Although Talentica is best known for product development, it also plays a strong role in data analytics and business intelligence, building smart analytics solutions that turn data into useful insights and help companies make better decisions.

Talentica supports IT and manufacturing businesses by -

- Designing data analytics platforms for business insights

- Building BI dashboards and reporting systems

- Working with big data, cloud data platforms and analytics tools

- Helping companies use data for planning, forecasting and performance tracking

Value for IT and Technology Businesses -

- Product analysis and user behavior tracking

- Business Intelligence for growth and revenue

- Cloud based data analytics

Value for manufacturing businesses -

- Track production as well as operational data

- Monitor efficiency and machine performance

- Analyse supply chain and inventory data

Talentica is considered a strong analytics partner based in Pune, a major IT and manufacturing hub. It has a strong focus on data-driven product engineering and solid working experience with modern analytics and BI technologies.

2.4) SG Analytics

SG Analytics is a Pune-based data analytics and research company that helps businesses make better decisions using data. The company works with global clients and supports industries such as IT, manufacturing and healthcare. SG Analytics helps companies understand their data clearly, reduce confusion and make smarter decisions. It provides end-to-end analytics and insight services, including data analytics and business intelligence, data management and data engineering support, advanced analytics and data science, consulting services and market research.

For manufacturing companies, SG Analytics helps by -

- Analyzing production and operational data

- Tracking efficiency, costs and performance

- Creating dashboards for management reporting.

For IT and tech businesses, SG Analytics supports -

- Business Intelligence for sales

- Customer and product performance analytics

- Data driven planning and forecasting

2.5) Rudder Analytics

Rudder Analytics is a data analytics and business intelligence company in Pune that helps organisations use data to make better decisions. The company focuses on converting raw and unstructured data into clear reports, dashboards and insights that business teams can easily understand. Rudder Analytics helps companies see what their data actually shows and use it to improve performance.

Why businesses choose Rudder Analytics -

- A strong focus on practical, business-friendly analytics

- Easy-to-understand dashboards and insights

- Helps businesses move from data confusion to clarity

- Suitable for both IT and manufacturing sectors

Rudder Analytics helps businesses turn data into useful information. By providing clear analytics and BI solutions, the company enables IT and manufacturing businesses to make smarter decisions, improve performance and grow with confidence.

2.6) ScatterPie Analytics

ScatterPie Analytics is a data analytics and business analytics company located in Pune. It helps businesses understand their data and make better decisions by making data simple, clear and useful for business teams, not just technical teams. ScatterPie helps companies turn raw data into easily readable reports and dashboards that support everyday business decisions.

ScatterPie supports businesses by -

- Turning raw data into simple charts and dashboards

- Helping leaders see what is working and what is not

- Connecting data from different systems in one place

- Supporting better planning and decision making

2.7) Persistent Systems

Persistent Systems is a global technology services and software company headquartered in India, with multiple delivery and hiring centers. It helps businesses use data, cloud and digital technologies to improve operations, build smarter products and make better decisions. Persistent helps companies modernize their systems and use data effectively to grow and stay competitive.

Persistent works across IT, data and digital transformations including -

- Data Analytics and Business Intelligence

- Data Engineering and cloud platforms

- AI and advanced analytics solutions

Persistent Systems is a trusted partner because -

- It has a global presence along with strong delivery teams in India

- It has deep expertise in data, cloud and AI technologies

- It focuses on practical, business-focused solutions.


3. Conclusion

Pune has emerged as one of India’s most important hubs for IT services and manufacturing. As digital adoption increases rapidly, businesses in these sectors generate large volumes of data from operations, customers and digital platforms. Data analytics and business intelligence companies in Pune play an important role in converting this data into meaningful insights. These analytics firms support manufacturing businesses by improving production efficiency, reducing downtime, optimizing supply chains and controlling operational costs. For IT and technology companies, they help track project performance, customer behavior, revenue growth and product usage through clear dashboards and reports. By choosing the right analytics partner, IT and manufacturing companies can improve performance, reduce risk and achieve long-term growth.

1. Introduction

Coimbatore is one of the fastest-growing industrial cities in Tamil Nadu. The city is well known for its strong manufacturing base, including textiles, engineering and machinery production. Many IT companies and technology startups are also growing here. As these businesses grow rapidly, they generate large amounts of data from machines, sales, supply chains and finance operations. Many companies collect this data but do not know how to use it, and this is where business intelligence and data analytics companies play an important role. These firms help businesses collect, clean and organise their data. They create simple dashboards, reports and visual charts that make complex information easy to understand. Instead of looking at thousands of numbers in an Excel sheet, business owners can see graphs that clearly show performance, profit, losses and trends. For tech businesses, analytics helps in understanding customer behaviour, product usage and website traffic. For manufacturing companies, analytics helps in tracking production speed, material usage and quality issues. In simple terms, data analytics and BI companies in Coimbatore help businesses make better decisions using facts instead of guesswork.

2. Top 6 Data Analytics Companies in Coimbatore for Manufacturing & Tech Businesses

2.1) DataTheta

DataTheta is an analytics company with offices in India and the United States. It helps businesses organise scattered data and turn it into clear, reliable information that supports decision making. Many companies collect data from different systems such as ERP, CRM and websites, but this data is often disconnected and difficult to use. DataTheta brings everything together into one structured, easy-to-understand system. It builds cloud-based platforms to store and manage data securely, creates automated data pipelines so that data moves smoothly across systems, and develops BI dashboards and reports for clear performance tracking. It also ensures data governance, security and compliance.

Industries Served -

- Pharma

- Healthcare

- CPG and retail

- Manufacturing

- SaaS

Companies can work with DataTheta in several ways -

- Fixed Project Model - for clearly defined projects

- Developers on demand - skilled experts are available as per the needs

- Managed analytics services - End to End analytics support

Min project size - $5,000+

Hourly rate - < $25 / hr

Employees - 50 - 249

Year founded - 2017

2.2) ClousTech Solutions

ClousTech Solutions is a software development company that helps businesses grow by building smart and practical digital solutions. They work closely with clients to turn their ideas into working websites, mobile apps and smart digital systems. Their main goal is to make technology simple and useful for businesses of all sizes. Instead of offering complicated solutions, ClousTech focuses on understanding what a business needs in order to improve daily operations, customer experience and overall performance. They aim to deliver reliable and modern digital products.

ClousTech provides a wide range of technology services including -

- Web Development - Designing and building professional business websites

- Cloud Migration - Moving business systems and data to secure cloud platforms

- Business Intelligence - Creating reports and dashboards for better decision making

- Business Automation - Automating repetitive tasks to save time and reduce errors

- Digital Marketing - Helping businesses promote their brand online

Founding Year - 2022

Team Size - 2-9

Cost of Services - <$30/h

2.3) Conventus

Conventus is a technology company that builds secure and smart automation tools for industries that must follow strict rules and regulations, such as healthcare, banking and insurance. The company focuses on helping organisations improve their internal processes using AI-driven automation while keeping data safe and compliant with legal standards. Conventus designs its software so that it connects easily with a company's existing systems. This means a business can improve what it already uses without having to replace everything.

Conventus is different because -

- It builds secure and compliance-focused technology solutions

- It specialises in AI-powered automation

- Its tools work well with existing business systems

- Its solutions are designed for regulated industries

Founding Year - 2004

Team Size - 50-249

Cost of Services - $30-70/h

2.4) ScienceSoft

ScienceSoft is an IT consulting and software development company founded in 1989. It has more than 750 experts and works with clients across the United States, Europe and the GCC. The company has delivered projects in more than 70 countries and supports more than 30 industries.

Some well known companies that have worked with ScienceSoft are -

- IBM

- eBay

- Ford

- Viber

Industries ScienceSoft works with -

- Healthcare

- Banking

- Manufacturing

- Retail

- Education

ScienceSoft also builds AI solutions such as -

- HIPAA compliant voice assistants

- Automated trading systems

- Computer vision applications

The main mission of ScienceSoft is to drive project success, no matter what. They ensure project quality and smooth delivery through a strong Management Office and a Technology and Competency Center.

Awards and Certifications -

- Listed in IAOP Global Outsourcing 100

- Included in Inc. 500

- Winner of FinTech Futures Banking Tech Award 2024

- ISO 9001 for quality management

- ISO/IEC 27001 for Information Security

- ISO 13485 for medical device quality

Min project size - $5,000+

Hourly rate - $50 - $99 / hr

Employees - 250 - 999

Year founded - 1989

2.5) LatentView Analytics

LatentView Analytics is a fast-growing company that provides data analytics and business insights. It helps businesses understand their data and use it to make better decisions. LatentView focuses on solving real business problems with data instead of just creating reports. It combines strong knowledge of marketing and customer behaviour with advanced skills in analytics, technology and Big Data, helping companies improve sales, product performance and overall strategy. LatentView provides analytics services to many Fortune 500 companies.

Areas of Expertise -

- Customer management

- Digital and social media marketing

- Product analysis

- Data analysis and modeling

- Brand management

LatentView is strong because of the following factors -

- Business-Led Solutions - Focuses on solving the business problems first, understands client goals before building the solutions and maintains open communication with clients

- Strong Analytics Expertise - Dedicated analytics focused company, converts raw data into clear and meaningful insights.

- Delivery excellence - Follows strong project management processes, ensures high quality and consistent results by focusing on timely and reliable delivery.

Min project size - $1,000+

Hourly rate - $150 - $199 / hr

Employees - 250 - 999

Year founded - 2006

2.6) phData Inc.

phData is a big data consulting company that helps businesses make better use of their large and complex data. The company is known for its deep expertise in Hadoop and big data platforms. phData works with many Fortune 500 companies and large enterprises to build, manage and improve their data systems. It helps in collecting huge amounts of data, organizing it properly and turning it into useful information for better decision making. phData takes care of designing the data architecture, monitoring system performance and ensuring reliability, security and scalability.

Companies choose phData because it has strong expertise in Hadoop and big data technologies, solid experience working with large enterprises and Fortune 500 companies, and provides end-to-end support rather than consulting alone. phData helps companies handle big data without complexity.

Min project size - $5,000+

Hourly rate - $100 - $149 / hr

Employees - 50 - 249

Year founded - 2014

3. Conclusion

Coimbatore has become an important hub for both manufacturing and technology businesses. As these companies grow at a rapid pace, the data they generate is also increasing. This data is useful only if it is properly understood and used. The data analytics and business intelligence companies in Coimbatore help businesses turn raw data into clear and meaningful insights. They support manufacturers in controlling costs and improving quality, and they help tech businesses track customer behaviour, sales performance and product usage. With dashboards, reports and automated systems, decisions become faster and more accurate, and are based on facts instead of guesswork. By working with the right analytics partner, companies can improve performance, reduce risks and grow confidently in today's market.

1. Introduction

Indore is quickly becoming an important technology hub in central India, especially for mid-size IT and SaaS companies looking for reliable data analytics partners. Rapidly growing SaaS businesses generate large amounts of product, customer and operational data. These companies need strong support in data engineering, business intelligence and reporting to turn that data into meaningful insights. The top IT companies in Indore help IT and SaaS firms build scalable data systems, improve product performance tracking and make smarter business decisions. They offer services such as dashboard development and cloud data setup. In this article we highlight the top 6 data analytics companies in Indore that are helping mid-size IT and SaaS businesses grow through structured and reliable data solutions.

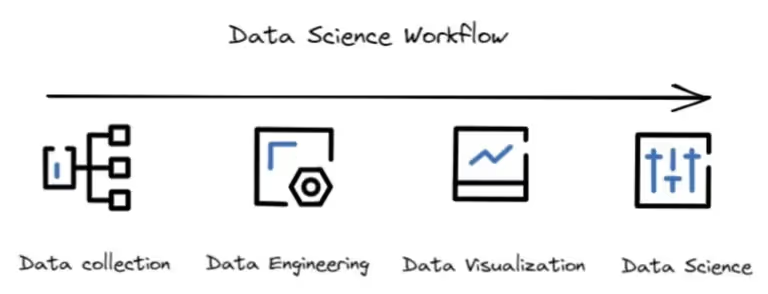

2. What Is Data Analytics Consulting for IT, SaaS Businesses?

Data analytics consulting for IT and SaaS businesses means helping companies use their data to make better decisions. Consultants organise and clean data, build dashboards and set up cloud data systems. They also create reports that show product performance, customer behaviour and revenue trends. AI and advanced analytics are used to improve forecasting and automation.

3. Top Data Analytics Companies in Indore

3.1) DataTheta

DataTheta is a trusted analytics and decision sciences consulting firm that works closely with mid-size IT and SaaS companies to build strong and scalable data systems. Instead of just creating dashboards, they help businesses create reliable and governed data environments that support product analytics, customer insights and more. They have a team of senior data experts with a solid track record in data analytics delivery. DataTheta enables SaaS and tech firms to use data more effectively for decision-making, performance measurement, and operational improvements.

DataTheta is relevant for IT and SaaS companies because -

- They help in building scalable cloud-based data architectures

- They create dashboards for revenue and performance monitoring

- They ensure data security and access control

- They help improve report accuracy using governed models

Min project size - $5,000+

Hourly rate - < $25 / hr

Employees - 50 - 249

Year founded - 2017

3.2) Fractal Analytics

Fractal Analytics is a global analytics company that helps large enterprises use data to make smarter business decisions. Many Fortune 500 companies work with Fractal because they see analytics as a strong competitive advantage. Fractal focuses on delivering clear insights and practical innovation through predictive analysis and visual storytelling. The company has around 600 professionals working across industries such as retail, insurance and technology.

What Fractal Analytics does -

- Helps companies use data to improve business decisions

- Builds predictive models to forecast trends and outcomes

- Ensures that analytics solutions are fully implemented and used

- Uses visual dashboards to make insights easy to understand

Min project size - Undisclosed

Hourly rate - $25 - $49 / hr

Employees - 1,000 - 9,999

Year founded - 2000

3.3) Tiger Analytics

Tiger Analytics is a global data analytics and AI consulting company that helps businesses use data to improve performance and decision making. For mid-size IT and SaaS companies, it represents the type of advanced analytics partner that supports growth, customer insights and scalable data systems. Tiger Analytics combines business understanding with strong technical expertise to solve real-world problems.

Key strengths of Tiger Analytics -

- Strong expertise in AI and machine learning

- Focus on long-term analytics adoption and not just reports

- Extensive experience across technology, retail and financial services

For mid size IT companies and SaaS companies in Indore, firms such as Tiger Analytics set a benchmark for building advanced, reliable and scalable analytics environments.

Min project size - Undisclosed

Hourly rate - Undisclosed

Employees - 10 - 49

Year founded - 2010

3.4) BestPeers

BestPeers is a software development company that helps businesses build smart, reliable and scalable digital solutions. The company focuses on using modern technologies in a practical way so that organisations can improve efficiency, reduce costs and grow faster in the digital world. BestPeers works with businesses of all sizes, and its main goal is to be a long-term technology partner rather than just a software developer.

BestPeers works with advanced technologies such as -

- Artificial Intelligence

- Blockchain

- Cloud computing

- Full Stack web development

Key services offered -

- UI/UX design and product design

- Enterprise software development

- Mobile App Development

Industries served -

- Healthcare

- eCommerce

- Logistics

- Education

- Finance

BestPeers stands out from other companies because of its strong focus on quality and timely delivery. They ensure clear communication and transparent processes, follow an agile development approach, and have a team of skilled developers, designers and consultants working together.

Founding Year - 2017

Team Size - 250-999

Cost of Services - $30-70/h

3.5) NextLoop Technologies LLP

NextLoop Technologies LLP is a software company in Indore that helps businesses grow through smart and reliable IT solutions. The company focuses on innovation, quality and practical technology that solves real business problems. Their goal is to support companies in improving efficiency and increasing productivity. NextLoop Technologies helps businesses turn their ideas into digital products.

Key Services Offered -

- Custom Software Development - Builds software based on specific business needs and focuses on performance, security and scalability.

- Web and Mobile app development - Develops responsive websites and builds Android and iOS mobile apps ensuring smooth performance.

- IT consulting services and cloud solutions - Offers secure cloud setup and reduces infrastructure costs. Reviews current IT systems and provides clear technology recommendations.

Businesses choose NextLoop Technologies because it provides customized solutions for different industries and focuses on quality and timely delivery. Its team of skilled developers and consultants has a strong understanding of modern technology.

Founding Year - 2020

Team Size - 10-49

Cost of Services - $30-70/h

3.6) HData Systems

HData Systems is a global Big Data Analytics and Business Intelligence service provider. The company helps businesses use data in a smart way so that they can grow faster and make better decisions. They turn raw data into useful insights using data science and advanced analytics. HData Systems supports companies by analysing market trends and business performance. Their main focus is on helping clients improve efficiency and increase revenue.

What HData Systems does -

- Collects and analyses large volumes of data

- Converts complex data into simple reports and dashboards

- Provides insights to improve business strategies

- Helps businesses understand competitor trends

They work with -

- Startups

- Large enterprises

- Mid-sized companies

Services offered -

- UI/UX Design

- AI development

- Data Science solutions

- Big Data analytics

- Data Visualisation

Companies use HData Systems because of its strong experience in data analytics. They focus on improving ROI and provide reliable and structured data solutions.

Employees - 10 - 49

Hourly Rate - < $25

4. Conclusion

Indore is steadily growing as a strong technology and analytics hub for mid-size IT and SaaS companies. As businesses scale, the need for structured data systems, clear reporting and AI-driven insights becomes more pressing. These top 6 analytics companies in Indore are helping SaaS and IT firms move beyond basic dashboards and build reliable, secure and scalable data environments. These firms offer services such as data engineering, BI dashboards and predictive analysis. They help businesses track key metrics like product performance in a simple and organized way. Choosing the right analytics partner ensures better decision-making, improved operational efficiency, and long-term growth.

1. Introduction

Chennai has grown into one of India's most important centres for data analytics, business intelligence and data engineering. Enterprises across industries such as finance, healthcare, retail and manufacturing depend on analytics teams based in Chennai to support both India-focused and global operations. As digital transformation accelerates, organisations are looking for analytics partners who build reliable data pipelines that do not break as data volumes grow, design BI platforms whose numbers business teams can trust, and support both structured and unstructured data. Chennai stands out because it combines strong engineering talent with cost-efficient delivery. This article highlights the top 10 data analytics firms in Chennai that offer end-to-end AI, BI and data engineering services. These firms help businesses improve planning, track performance accurately and support better decision making.

2. What Is Data Analytics in AI, BI & Data Engineering?

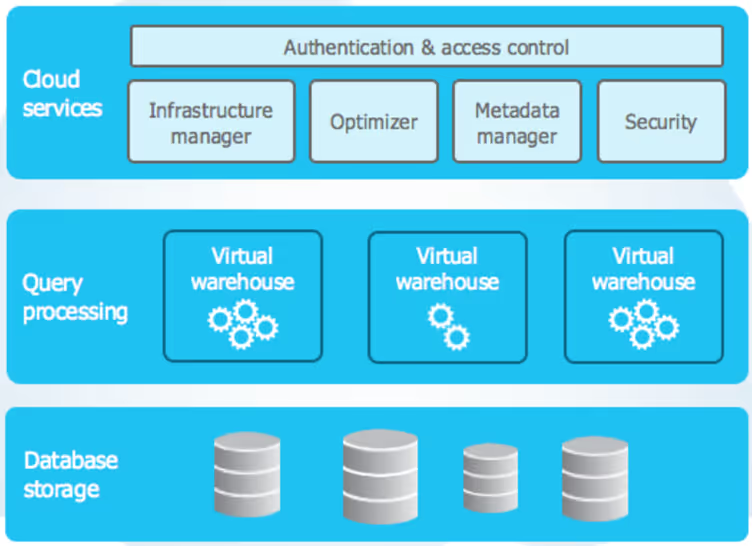

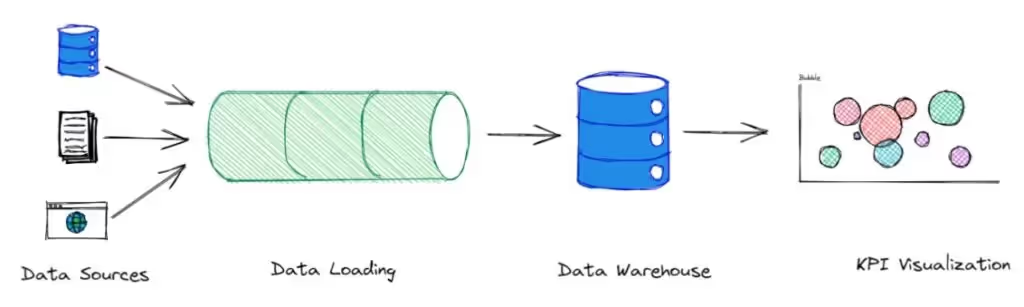

Data analytics means turning raw data into useful information that helps businesses make better decisions. The process includes collecting data from different systems, cleaning and organising it, and then analysing it to understand what is happening and what is likely to happen next. It becomes much more powerful when data engineering, business intelligence, and AI and advanced analytics are combined. Data engineering focuses on building reliable data pipelines. It ensures that data is collected, processed and made available. Business intelligence turns this prepared data into reports and dashboards that teams use to track performance and review results. AI and advanced analytics use the same data to create forecasting models that help businesses respond faster and plan ahead. When these three areas come together, they form scalable data architectures that grow with the business.
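As a rough illustration of how these three layers fit together, the following is a minimal Python sketch. The source systems (`crm_rows`, `erp_rows`), the revenue figures and the function names are all hypothetical and only meant to show the idea: a cleaning step stands in for data engineering, a monthly total stands in for a BI report, and a simple moving average stands in for a forecasting model.

```python
from statistics import mean

# Hypothetical raw records from two source systems (illustrative data only).
crm_rows = [
    {"month": "2024-01", "revenue": "1200"},
    {"month": "2024-02", "revenue": "1500"},
    {"month": "2024-02", "revenue": None},  # missing value to be cleaned out
]
erp_rows = [
    {"month": "2024-01", "revenue": "800"},
    {"month": "2024-03", "revenue": "2100"},
]


def clean(rows):
    """Data engineering step: drop rows with missing revenue and cast to float."""
    return [
        {"month": r["month"], "revenue": float(r["revenue"])}
        for r in rows
        if r["revenue"] is not None
    ]


def monthly_report(rows):
    """BI step: total revenue per month, sorted by month."""
    totals = {}
    for r in rows:
        totals[r["month"]] = totals.get(r["month"], 0.0) + r["revenue"]
    return dict(sorted(totals.items()))


def naive_forecast(report, window=2):
    """Analytics step: average of the last `window` months as a simple forecast."""
    values = list(report.values())
    return mean(values[-window:])


prepared = clean(crm_rows + erp_rows)
report = monthly_report(prepared)
forecast = naive_forecast(report)
print(report)
print(forecast)
```

Real projects would typically replace each step with dedicated tooling (a pipeline framework, a BI platform, a proper forecasting model), but the flow from raw records to report to forecast stays the same.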

3. Top 10 Data Analytics Firms in Chennai

3.1 DataTheta

DataTheta is a leading Chennai-based data analytics consulting company that helps enterprises across industries such as Pharma, Healthcare, Retail/CPG, Energy, and BFSI. The Chennai team at DataTheta specializes in transforming fragmented enterprise data into unified, analytics-ready platforms that support faster decision-making and measurable business outcomes. The company provides end-to-end services including Data Analytics, Business Intelligence, Data Engineering & Warehousing, Data Science, and GenAI solutions. DataTheta is known for its strong focus on governance, compliance, security and scalability. Its team of senior data engineers and scientists has more than 10 years of experience on average. DataTheta combines industry expertise with flexible engagement models, including fixed-time projects, managed services, and developers-on-demand (also known as fixed time resource). This approach helps businesses modernize their analytics capabilities efficiently while keeping control over cost and performance.

3.2 Fractal Analytics

Fractal Analytics is a well-established, Chennai-based data analytics company with mature analysis, forecasting and measurement capabilities. The company serves both SMBs and large enterprises in the medical, financial services, retail and technology sectors. It provides a wide range of analytics tools and modeling solutions. Its services in Chennai fall into three categories: data preparation, creation of machine learning models, and final deployment of analytics. Fractal Analytics follows a result-oriented approach aimed at providing key insights for business planning. Clients rely on Fractal Analytics when they need help with performance evaluation systems, trend analysis and scalable reporting solutions that are reliable and meet enterprise requirements.

3.3 LatentView Analytics

LatentView Analytics is another well-known multinational data analytics company with a solid presence in Chennai. It works in sectors such as retail, finance, technology, and consumer goods. LatentView provides high-level business analytics services such as customer segmentation, predictive modeling, optimization methods, and data engineering. The firm uses its technical expertise and consulting experience to help organizations develop analytics processes that are linked to business planning cycles. Its solutions are customized to enhance the accuracy of reporting, expose useful patterns, and improve overall decision support across functions. LatentView delivers quantifiable analytics results as well as reporting systems that help in long-term performance monitoring.

3.4 Tiger Analytics

Enterprises choose Tiger Analytics when they need an analytics system that connects business metrics across teams rather than a set of isolated dashboards. Instead of only building dashboards, this company in Chennai focuses on making sure that the numbers behind those dashboards are correct, consistent and trusted across the business. They help enterprises define the right business KPIs and keep data pipelines stable so that reports don't break. They also detect data issues early and use SQL and Python to transform raw data into something usable.

3.5 Tredence

Tredence is one of the top data analytics service providers in Chennai and a popular data science and analytics advisory firm. The company offers data engineering, analytics model implementation and forecasting systems that help clients improve the accuracy of planning and performance evaluation. Tredence collaborates with businesses of every type to implement analytics processes and solutions that deliver quantifiable results. It serves the consumer goods, health and technology industries. Clients often praise its well-structured delivery and the clarity of its insights.

3.6 Absolutdata

Absolutdata provides customer analytics, behavioural modeling and data-based decision support consulting to Chennai-based clients. Its offerings include segmentation analysis, data preparation, predictive models and performance reporting dashboards. Absolutdata helps businesses in industries such as retail, financial services and technology improve their understanding of customer behaviour and quantify results in key performance areas. Its services support continuous improvement of analytics solutions and a clearer view of performance indicators. A strong delivery presence makes Absolutdata a preferred analytics partner for global enterprises. It offers highly skilled analytics talent, cost-effective delivery, strong domain expertise and scalable, on-time project execution.

3.7 Genpact

Genpact is another well-known provider of analytics solutions in Chennai. It helps build scalable and robust data analytics programs that improve operational measurement, risk assessment and reporting systems. Genpact's key services include data preparation, analytics implementation and performance tracking systems. The Genpact analytics team in Chennai works in sectors such as finance, supply chain and compliance, where reliability and sound measurement are needed. Genpact helps enterprises transform through data analytics, artificial intelligence (AI), cloud technologies, and automation. Its clients come from industries such as finance, retail, healthcare, supply chain, and manufacturing.

3.8 Cognizant Analytics

Cognizant is another leading global information technology and data consulting company with offices in Chennai. It helps with data preparation, analytics workflow implementation, and performance evaluation. The experienced team at Cognizant Chennai helps businesses create analytics models, combine reporting systems and improve the delivery of insights. Cognizant works with healthcare, banking, logistics, and retail clients, helping them consolidate analytics practices and improve the accuracy of planning. It offers a wide range of analytics and data-driven services to solve its clients' business problems.

3.9 WNS Analytics

WNS Analytics is a Chennai-based data analytics company that provides performance measurement solutions, including predictive modelling, reporting structures, and trend analysis. The firm serves clients in the retail, insurance and healthcare industries. Its experienced team combines domain experience with organized analytics operations to help organizations improve performance visibility and reporting accuracy at the functional level. WNS Analytics integrates data, analytics, AI, and human expertise to help businesses extract key insights, modernize data infrastructure, and enable smarter decision making. It aims to deliver business outcomes rather than just dashboards.

3.10 Deloitte Analytics

Deloitte's analytics practice in Chennai usually works with large enterprises that operate complex, regulated or global data environments where data issues can quickly turn into business risks. Its main goal is to help organisations bring structure and consistency to analytics at scale. Deloitte in Chennai supports enterprises in building secure and governed data pipelines and in aligning KPIs across teams. It uses unified, governed BI layers to eliminate KPI conflicts and secures data pipelines for regulated environments. Enterprises choose Deloitte when they need analytics built for long-term operational use. Its main strength is in building governance frameworks that remain stable.

4. Pricing and Selection Criteria for Right Data Analytics & BI Companies In Chennai

4.1 Pricing

Pricing for data analytics and BI services mainly depends on what you need, how complex your data is and how long the work will run. Some firms charge for a fixed-scope project, which works well when the requirements are clear. Others work on a monthly model, in which the team supports ongoing improvements, reporting and fixes. To avoid conflicts, deliverables, timelines and ownership should be clear from the start.

4.2 Client Reviews & Case Experience

Client reviews and case experience help you understand how reliable a data analytics or BI firm actually is. Testimonials show not only whether dashboards were built, but also whether the firm delivered on time and solved real business problems. Case studies are equally important as they show what problem was addressed, how the data was used and what results were achieved. When a client returns for more work, it is usually a sign of consistent delivery and good work, so you should also look for repeat engagements.

4.3 Industry Domain Expertise

Industry domain expertise plays a crucial role in how useful analytics outcomes are. It means the analytics partner already understands how your business works and does not need long explanations about basic terms, data sources and workflows. For example, a company experienced in healthcare is familiar with patient data as well as clinical operations. This familiarity leads to faster decision making and more relevant insights.

4.4 Data Security & Governance

Data security and governance mean keeping your data safe, controlled and trustworthy while it is being used for analytics and BI. This matters even more when the data includes customer details, financial records and similar sensitive information. A good analytics partner explains how the data is protected at every step, including access controls. Governance focuses on how the data is defined and used, and supports audits by tracking the source of the data and how it changes.

4.5 Technical Capability

Technical capability means the analytics firm has the right tools and skills to handle data properly from beginning to end. A strong firm manages data engineering, which means data is collected from different systems, cleaned and prepared for analysis. The firm should also be good at building dashboards that are reliable and easy to understand. Compatibility with platforms such as AWS, Snowflake and Azure is also important because many businesses run their data on them.

4.6 Long-Term Support

Analytics is not a one-time setup. A firm that offers long-term support monitors data pipelines, fixes failures and ensures that reports show the right KPIs. Good partners also help in tracking performance, improving speed and adapting analytics when new data sources or users are added. Long-term support keeps analytics useful for real business decisions.
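To make the idea of pipeline monitoring concrete, here is a small Python sketch of a health check. It assumes a hypothetical pipeline that records when it last loaded data and how many rows it loaded; the function name, thresholds and sample timestamps are illustrative and not taken from any specific vendor's tooling.

```python
from datetime import datetime, timedelta


def check_pipeline(last_loaded_at, row_count, now,
                   max_age=timedelta(hours=24), min_rows=1):
    """Return a list of issues for one pipeline run; an empty list means healthy."""
    issues = []
    # Freshness check: data older than max_age is flagged as stale.
    if now - last_loaded_at > max_age:
        issues.append("stale data")
    # Volume check: an unexpectedly small load often signals a silent failure.
    if row_count < min_rows:
        issues.append("too few rows")
    return issues


# Hypothetical example runs (fixed timestamps so the result is repeatable).
now = datetime(2024, 1, 2, 12, 0)
healthy = check_pipeline(datetime(2024, 1, 2, 6, 0), 500, now)
broken = check_pipeline(datetime(2023, 12, 30, 6, 0), 0, now)
print(healthy)
print(broken)
```

In practice a support team would run checks like these on a schedule and send an alert whenever the returned list is not empty.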

5. Conclusion

The data analytics ecosystem in Chennai is still growing at a rapid pace, with more enterprises looking for structured analytics support, prediction systems, and performance measurement tools. This article has listed some of the reliable companies in Chennai that provide data analytics services such as data engineering, predictive modeling, reporting structures and consulting. They have experience working with small and medium-sized businesses (SMBs) as well as large enterprises, solving business problems with an advanced technology stack. You can compare these companies' capabilities, pricing structures, and data engineering experience and expertise before selecting the best data analytics partner in India for your business.

1. Introduction

Hyderabad has become one of India's strongest hubs for data analytics, data engineering, BI, and AI execution, especially for IT services and technology-based businesses. Tech organisations now generate data from many sources such as customer platforms, ERPs, billing systems and cloud data warehouses. Even with all this data, teams still struggle to transform it into meaningful insights. Common challenges include data pipelines breaking silently without any alert, slow queries driving up cloud costs, and schema changes that cause dashboards to fail. For these reasons, companies want partners who take responsibility for making analytics work consistently, not vendors who just build dashboards. Modern enterprises in Hyderabad choose analytics firms that can keep data pipelines reliable and monitored, secure cloud data environments, and ensure that KPIs mean the same thing across teams. Analytics must now support product planning, customer intelligence, cost control and long-term decision ownership, all without confusion. This article highlights the top 10 data analytics companies in Hyderabad that businesses can trust when analytics systems need to scale and support real business planning.

2. What Is Data Analytics in Hyderabad?

Data analytics means using data to understand what is happening and to make better decisions. The process includes collecting data, cleaning it, and then studying it to find patterns and trends. Organisations use data analytics services in Hyderabad for better planning, predicting future outcomes, tracking performance, and reducing risks. It also supports dashboards, performance tracking, cloud data platforms, and business reports. Put simply, data analytics turns raw data into clear information that helps teams and leaders make better, more informed decisions.
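
The collect, clean, and study steps described above can be sketched in a few lines of Python. This is only an illustration: the records, the field names, and the helpers clean and trend are made up for the example, and real projects typically work with much larger datasets and dedicated tools.

```python
from statistics import mean

# Step 1 (collect): hypothetical raw records, as they might arrive from a billing system.
raw_rows = [
    {"month": "2024-01", "revenue": "1200"},
    {"month": "2024-02", "revenue": ""},       # missing value
    {"month": "2024-03", "revenue": "1500"},
    {"month": "2024-04", "revenue": "n/a"},    # unparseable value
    {"month": "2024-05", "revenue": "1800"},
]


def clean(rows):
    """Step 2 (clean): keep only rows whose revenue parses as a number."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append({"month": row["month"], "revenue": float(row["revenue"])})
        except ValueError:
            continue  # skip rows with missing or malformed revenue
    return cleaned


def trend(rows):
    """Step 3 (study): compare the average of the later half with the earlier half."""
    values = [row["revenue"] for row in rows]
    half = len(values) // 2
    if half == 0:
        return "not enough data"
    earlier, later = mean(values[:half]), mean(values[-half:])
    if later > earlier:
        return "rising"
    if later < earlier:
        return "falling"
    return "flat"


cleaned_rows = clean(raw_rows)
summary = trend(cleaned_rows)
print(len(cleaned_rows), "usable rows; revenue trend is", summary)
```

The point of the sketch is the order of the steps: the trend is only computed after the unusable rows have been removed, so bad inputs do not distort the result.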

3. Top 10 Best Data Analytics Companies in Hyderabad

3.1 DataTheta

DataTheta is a decision sciences and analytics consulting firm headquartered in Texas, USA, with delivery centres in Hyderabad. Enterprises choose DataTheta when they need reliable, governed, and scalable analytics environments rather than just dashboards. DataTheta focuses on building analytics systems that help teams with daily planning and decision making. This includes cloud platforms, SQL and Python pipelines, and continuous monitoring of data quality. Enterprises across IT, SaaS, healthcare, and industrial sectors choose DataTheta when they want a partner that supports real planning cycles, not just reporting.

3.2 Evalueserve

Evalueserve is another well-known name for analytics solutions in Hyderabad. It helps build scalable, robust data analytics programs that improve operational measurement, risk assessment, and reporting systems. Its key services include data preparation, analytics implementation, and performance tracking systems. The Evalueserve team works in sectors such as finance, supply chain, and compliance, where reliability and sound measurement are needed. Evalueserve helps enterprises transform through data analytics, artificial intelligence (AI), cloud technologies, and automation. Its clients come from industries such as finance, retail, healthcare, supply chain, and manufacturing.

3.3 Gramener

Gramener is an India-based analytics company headquartered in Hyderabad that helps organisations understand their data through clear visuals, machine learning models, and cloud-based analytics systems. Gramener focuses on making data easy to see, understand, and act on. It builds dashboards that track business performance, models that predict outcomes, and analytics systems that support daily planning operations. The firm mainly works with industries such as banking, manufacturing, and retail. It is best known for visual intelligence and practical analysis, turning complex data into insights that business teams can easily see.

3.4 Sutherland Analytics

Sutherland Analytics is part of Sutherland, a global services company that helps large organisations run and manage analytics at scale. From its Hyderabad delivery teams, it supports businesses with forecasting models, secure data platforms, and machine learning systems. Sutherland does not only build analytics systems; it also makes sure they keep running smoothly every day. It mainly works with industries such as insurance, healthcare, and logistics, where data systems must always be available and reliable. Enterprises choose Sutherland when they need a partner to own analytics end to end and handle ongoing reporting, so that data systems continue to support decisions over time.

3.5 Merilytics

Merilytics is an India-based analytics and AI company headquartered in Hyderabad. It helps businesses plan better and see their performance clearly using data, machine learning models, and automated reports. Merilytics helps companies understand customers, predict future demand, and track business performance through dashboards and analytics systems. It also builds cloud data pipelines that keep data flowing smoothly and models that support accurate forecasting. Merilytics works with sectors such as finance, retail, and healthcare, where good planning and clear visibility matter. Enterprises that want reliable analytics to improve decision making and planning accuracy go for Merilytics.

3.6 Tiger Analytics

Enterprises go for Tiger Analytics when they need an analytics system that connects business metrics across teams rather than a set of isolated dashboards. Instead of only building dashboards, the company focuses on making sure the numbers behind those dashboards are correct, consistent, and trusted across the business. It helps enterprises define the right business KPIs and keep data pipelines stable so that reports don't break. It also catches data issues early and uses SQL and Python to transform raw data into something usable.

3.7 SG Analytics

SG Analytics is a data analytics and research services company that works with global enterprises to turn data into clear insights that the business can use. SG Analytics has a delivery and hiring presence in Hyderabad that supports analytics work for clients. It helps companies understand their data and make better decisions. Its team works on data analytics, business intelligence, and advanced analytics, and aims to keep its outputs business focused rather than purely technical. SG Analytics serves industries such as healthcare, retail, and technology. Enterprises that need reliable analytics support and ongoing reporting often go for SG Analytics.

3.8 Accenture Analytics

Accenture is a well-known global provider of business and analytics services built on technology, transformation, and innovation. It provides advanced data analytics services to enterprise clients in Hyderabad, covering data platform strategy, analytics implementation, and reporting systems. The company operates in the technology, healthcare, financial, and manufacturing industries. Organisations use Accenture's services to consolidate data sources, improve prediction accuracy, and support decision making within digital transformation initiatives. The Accenture team draws on a broader data and AI practice focused on turning data into insights that drive decisions and improve performance.

3.9 EY Analytics

EY Analytics is part of EY (Ernst & Young). It helps large organisations use data in a structured, secure, and well-governed way. EY's Hyderabad team supports businesses with forecasting models, cloud data platforms, and reporting systems. EY helps companies set up analytics correctly so that the numbers remain consistent, trusted, and aligned with business planning. It also focuses on data governance, which means setting clear rules for how data is collected, used, secured, and reported. Enterprises that need analytics to match planning cycles and financial reporting choose EY for reliable, secure, and well-governed analytics systems that leaders can trust for planning and daily decision making.

3.10 PwC Analytics

PwC Analytics is part of PwC (PricewaterhouseCoopers). It helps large organisations use data to plan and track performance clearly. PwC's Hyderabad team supports businesses with forecasting, reporting, and cloud data systems. PwC helps companies collect and organise data and turn it into clear insights and reports that leaders can trust. It also supports AI and advanced analytics, and makes sure that data is secure and follows governance rules. PwC works with industries such as healthcare, retail, finance, and manufacturing, where accurate data is critical.

4. Pricing and Selection Criteria for Choosing the Right Data Analytics Company in Hyderabad

1. Pricing Model -

Pricing for data analytics and BI services mainly depends on what you need, how complex your data is, and how long the work will run. Some firms charge a fixed price for a fixed-scope project, which works well when the requirements are clear. Others work on a monthly model, in which the team supports ongoing improvements, reporting, and fixes. To avoid conflicts, deliverables, timelines, and ownership should be clear from the start.

2. Client Reviews -

Client reviews help you understand how reliable a data analytics or BI firm actually is. Testimonials show more than whether dashboards were built; they also show whether the firm delivered on time and whether it solved real business problems. Look for repeat engagements as well: when a client returns for more work, it is usually a sign of consistent delivery and good work.

3. Industry Expertise -

Industry domain expertise plays a crucial role in how useful analytics outcomes are. When a consulting partner already understands how your business works, they don't need long explanations of basic terms, data sources, and workflows. For example, a company experienced in healthcare is familiar with patient data and clinical operations. This familiarity leads to faster decision making and more relevant insights.

4. Data Security -

Data security and governance mean keeping your data safe, controlled, and trustworthy while it is used for analytics and BI. This matters even more when the data includes customer details or financial records. A good analytics partner explains how the data is protected at every step, including access controls. Governance focuses on how data is defined and used, and supports audits by tracking where data comes from and how it changes.

5. Technical Capability -

Technical capability means the analytics firm has the right tools and skills to handle data properly from beginning to end. A strong firm manages data engineering, which means collecting data from different systems, cleaning it, and preparing it for analysis. It should also be good at building dashboards that are reliable and easy to understand. Compatibility with platforms such as AWS, Snowflake, and Azure is important because many businesses use them.

5. Conclusion

The data analytics ecosystem in India is still growing at a rapid pace, with more enterprises looking for structured analytics support, prediction systems, and performance measurement tools. In this article, I have listed some of the reliable companies in India that provide data analytics services such as data engineering, predictive modeling, reporting structures, and consulting. They have prior experience working with small and medium-sized businesses (SMBs) and large enterprises, solving business problems with the help of an advanced technology stack. You can compare these companies' capabilities, pricing structures, and data engineering experience and expertise before making a final call on the best data analytics partner in India for your business.

1. Introduction

In the past couple of years, Bangalore has established itself as a major hub for high-quality data analytics and consulting services. It attracts both local and international companies looking for advanced analytics solutions to better understand their business and to grow. Data remains essential for organisations to plan properly, measure performance, and shape future decisions. With digital platforms adopted rapidly and at large scale, companies are now investing heavily in analytics solutions that go beyond simple reporting to support deeper performance analysis and high-level operational assistance. This has increased the demand for analytics partners in Bangalore that can help with structured data engineering, predictive modelling, analytics implementation, and regular reporting frameworks. In this blog, I will share an in-depth list of the 10 best data analytics companies in Bangalore, based on their key services, customer feedback, industry experience, and analytics performance results. This will help you select the right data analytics services provider in Bangalore, one that solves your business problems and helps you grow revenue.

Related Post:- Leading data analytics service providers in India

2. What Is Data Analytics in Bangalore?